Latency in Web Hosting and How it Can Affect Your Business

Author: Connectria

Date: March 1, 2022

Latency is always at the forefront when discussing or researching infrastructure services. But what does it mean? This post will explain latency in web hosting not just between websites and users but also between different components of the underlying infrastructure.

I will explore how millisecond delays can add up to real costs and how Connectria’s hybrid cloud can help. If latency in your infrastructure is a concern or problem, it’s definitely one you want to solve. First, it’s helpful to understand what latency is and why it’s important.

What is Latency?

Speed is fundamentally the name of the game when it comes to infrastructure. Latency is the time it takes for data to travel across a network from its source to its destination. It is typically measured in milliseconds (ms) or microseconds (µs).

Essentially, it’s the total time it takes for a request to leave your computer, cross whatever medium connects you to the internet, and reach the host you’re trying to contact. Along the way, the request will likely pass through a number of routers, switches, and other devices. Each of these adds to the latency and, in most cases, increases the time it takes the data to arrive at its final destination.

Latency has long been one of the biggest challenges in infrastructure because it directly affects application usability. Each hop a data packet makes adds delay. Think of latency in web hosting or hybrid cloud as a train leaving the station: the goal is to reach your destination, in this case your infrastructure assets, as quickly as possible, and every stop along the way adds time.

Latency starts with the connections between users and applications followed by connections between different applications, workloads, and infrastructure in a data center, all of which are equally important. Next, I’ll discuss the causes of latency and why they matter.

Understanding Latency

Ultimately, latency affects how an end user interacts with an application. If the latency is too high, the user interface becomes sluggish and the user has a bad experience. The closer the connection gets to Local Area Network (LAN) speeds, the more responsive the application will feel. Depending on how an application performs its operations, the effect of latency can also be amplified.

For example, in some applications 1 millisecond of latency between the database and the application servers translates into 1 second of delay in the end-user interface. This can happen when a single user action triggers many sequential round trips between the two tiers, so the per-trip delay is paid over and over. In these situations, low-latency connectivity is critical.
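To see how a small per-request delay can grow into a full second at the user interface, consider a minimal sketch. It assumes, purely for illustration, that one user action triggers 1,000 sequential round trips between the application server and the database. That count and the function name `user_visible_delay_ms` are hypothetical and not taken from any particular application.

```python
def user_visible_delay_ms(round_trips: int, latency_ms: float) -> float:
    """Total network wait when each round trip must finish before the next starts."""
    if round_trips < 0 or latency_ms < 0:
        raise ValueError("round_trips and latency_ms must be non-negative")
    return round_trips * latency_ms


# Hypothetical workload: 1,000 sequential database calls per user action.
ROUND_TRIPS_PER_ACTION = 1_000

for latency_ms in (0.5, 1.0, 5.0):
    total_ms = user_visible_delay_ms(ROUND_TRIPS_PER_ACTION, latency_ms)
    print(f"{latency_ms} ms per round trip -> {total_ms / 1000:.1f} s per user action")
```

Under that assumption, 1 ms between tiers becomes about 1 second for the user, and halving the latency halves the wait.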

There are a number of factors that can contribute to latency in a data center, including:

- The distance between servers and users

- The number of routers and switches between servers and users

- The type of traffic on the network

- The number of hops between servers and users

As a rule of thumb, every 100 kilometers of distance between a data center and the public cloud adds roughly 1 ms of latency to your connection. Data packets can also take different paths through the network before reaching their destination. Unless an administrator takes steps to keep packets on a consistent path for the entire trip, delays at individual hops can add up to noticeable latency.
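The rule of thumb can be written as a small helper. This is only a sketch of the estimate described above: the function name `estimate_latency_ms` and its parameters are illustrative, and real paths add delay from routing and equipment that distance alone does not capture.

```python
def estimate_latency_ms(distance_km: float, ms_per_100_km: float = 1.0) -> float:
    """Estimate distance-related latency using the 1 ms per 100 km rule of thumb."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return distance_km / 100.0 * ms_per_100_km


# Illustrative distances, not measurements of any real link.
for km in (50, 100, 500, 2_000):
    print(f"{km:>5} km -> about {estimate_latency_ms(km):.1f} ms")
```

Keeping the per-100-km factor as a parameter makes it easy to plug in a different figure if your own measurements suggest one.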

Latency and Connectria’s Hybrid Cloud

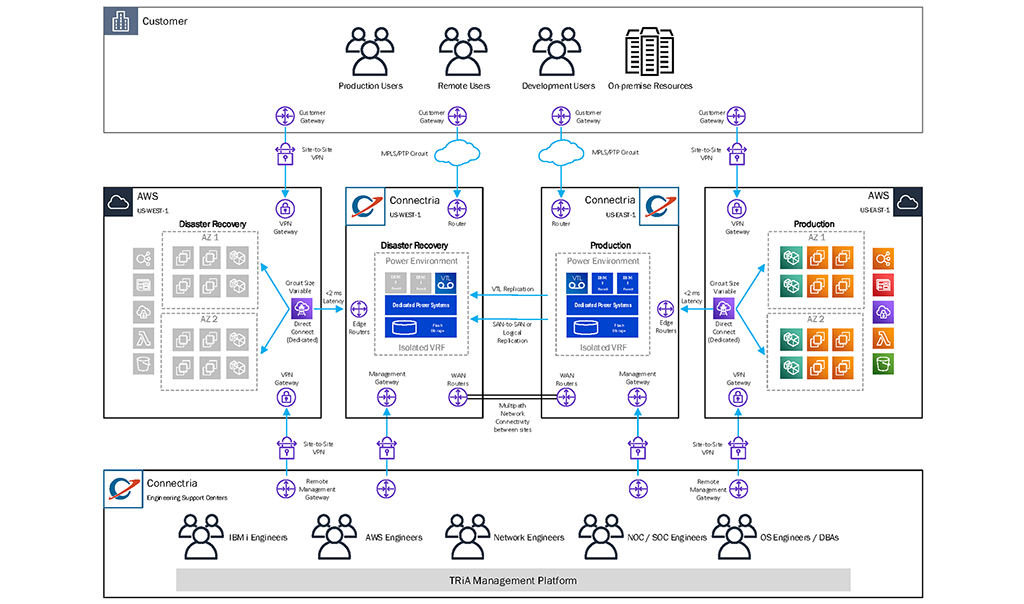

One of the most effective ways to reduce latency is to move the computing workload closer to the cloud environment it needs to reach. Connectria has purpose-built data centers next to the public cloud infrastructures in the US-East-1 and US-West-1 regions. By positioning the data centers in those locations, we have effectively eliminated distance as a factor in latency.

For example, the distance between Atlanta, GA, and Ashburn, VA, is approximately 640 miles, or 1,030 kilometers. Using the rule of thumb described above, we would estimate a latency of about 10.3 ms. To validate this, I compared the estimate with figures from several network-as-a-service providers, which put the latency between those two locations at approximately 11.9 ms to 12 ms. Distance has one of the biggest impacts on latency between workloads.
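The comparison in this example can be reproduced with a few lines of arithmetic. The sketch below uses only the figures quoted above (640 miles, the 1 ms per 100 km rule, and the observed 11.9 ms to 12 ms); the variable names are illustrative.

```python
KM_PER_MILE = 1.609344  # international mile in kilometers

distance_miles = 640                      # Atlanta, GA to Ashburn, VA (from the text)
distance_km = distance_miles * KM_PER_MILE
estimated_ms = distance_km / 100.0        # rule of thumb: 1 ms per 100 km

observed_ms = (11.9, 12.0)                # provider figures quoted in the text

print(f"Distance: {distance_km:.0f} km")
print(f"Estimated latency: {estimated_ms:.1f} ms")
for value in observed_ms:
    gap = value - estimated_ms
    print(f"Observed {value} ms is {gap:.1f} ms ({gap / estimated_ms:.0%}) above the estimate")
```

The estimate lands within about 2 ms of the observed figures, which is consistent with distance accounting for most of the latency on this route.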

Connectria’s hybrid-aligned data centers in the east and west provide sub-2 ms latency into public clouds such as AWS, Azure, Oracle Cloud, and Google Cloud. This means that Power Systems hardware, which typically runs ERPs and similar line-of-business (LOB) applications, can work alongside public cloud services without latency becoming a concern when moving to the cloud.

Read our article How to Leverage Connectria’s Hybrid Architecture

Don’t Let Latency Impact Your Business

Connectria is a global leader in cloud hosting and managed services for IBM, AWS, Azure, and more, and our hosted application environment is well suited to solving problems caused by latency. We offer a hybrid cloud that allows organizations to keep control of their data center while also taking advantage of the public cloud, which means data generated by an organization does not have to travel as far before it can be consumed.

Connectria works with customers who want to use cloud technology but don’t necessarily want to take on the responsibility – or cost – of building and maintaining a private or public cloud themselves. The result is a solution that is perfect for organizations looking for speed, agility, and affordability without sacrificing performance.

Connectria can help you solve your web hosting latency problems quickly and affordably. It’s our mission to make hybrid cloud hosting easy for businesses around the world, so contact us today to learn more about how you can save money while also increasing performance for your business or application.
